Unloading data from Redshift directly to DSS using JDBC is reasonably fast. However, if you need to unload data from Redshift to S3, the Sync recipe has a “Redshift to S3” engine that implements a faster path.

In order to use Redshift to S3 sync, the following conditions are required:

- The source dataset must be stored on Amazon Redshift

In addition to the automatic fast-write that happens transparently each time a recipe must write into Redshift, the Sync recipe also has an explicit “S3 to Redshift” engine. This is faster than automatic fast-write because it does not copy the data to a temporary location in S3 first. It will be used automatically if the following constraints are met:

- The destination dataset is stored on Redshift
- The S3 bucket and the Redshift cluster are in the same Amazon AWS region
- The S3 side is stored in CSV format, using the UTF-8 charset
- For the S3 side, the “Unix” CSV quoting style is not used
- For the S3 side, the “Escaping only” CSV quoting style is only supported if the quoting character is `\`
- For the S3 side, the files are either all stored uncompressed or all stored using the GZip compression format

In addition, the schema of the input dataset must match the schema of the output dataset, and values stored in fields must be valid with respect to the declared Redshift column type.

Note that automatic fast-write does not apply when visual recipes run directly in-database, as the data does not move outside of the database.
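The constraints for the “S3 to Redshift” engine read naturally as a pre-flight checklist. The Python sketch below encodes them as a function that returns the unmet conditions. It is illustrative only: DSS selects the engine itself, and the `SyncSide` structure, its field names, and the string values used for quoting styles and compression (`"unix"`, `"escaping_only"`, `"gzip"`, `"none"`) are hypothetical stand-ins, not part of any DSS API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


# Hypothetical description of one side of a Sync recipe (not a DSS API).
@dataclass
class SyncSide:
    storage: str                                   # "s3" or "redshift"
    region: Optional[str] = None                   # AWS region of bucket/cluster
    schema: List[Tuple[str, str]] = field(default_factory=list)  # (column, type)
    file_format: Optional[str] = None              # e.g. "csv"
    charset: Optional[str] = None                  # e.g. "UTF-8"
    quoting_style: Optional[str] = None            # e.g. "excel", "unix", "escaping_only"
    quote_char: Optional[str] = None
    compressions: List[str] = field(default_factory=list)  # per file: "none" or "gzip"


def s3_to_redshift_blockers(src: SyncSide, dst: SyncSide) -> List[str]:
    """List the documented constraints that are not met; empty means eligible."""
    problems: List[str] = []
    if src.storage != "s3":
        problems.append("source dataset is not stored on S3")
    if dst.storage != "redshift":
        problems.append("destination dataset is not stored on Redshift")
    if src.region is None or src.region != dst.region:
        problems.append("S3 bucket and Redshift cluster are not in the same AWS region")
    if src.file_format != "csv" or (src.charset or "").lower().replace("-", "") != "utf8":
        problems.append("S3 side is not CSV in UTF-8")
    if src.quoting_style == "unix":
        problems.append('"Unix" CSV quoting style is not supported')
    if src.quoting_style == "escaping_only" and src.quote_char != "\\":
        problems.append('"Escaping only" style requires the quoting character to be \\')
    kinds = set(src.compressions)
    # All files must share one mode, and that mode must be "none" or "gzip".
    if len(kinds) > 1 or not kinds <= {"none", "gzip"}:
        problems.append("S3 files must be all uncompressed or all GZip")
    if src.schema != dst.schema:
        problems.append("input and output schemas differ")
    # Whether stored values fit the declared Redshift column types cannot be
    # checked from metadata alone; invalid values only surface at load time.
    return problems


# Usage: a gzipped UTF-8 CSV dataset in the same region as the cluster.
src = SyncSide("s3", "eu-west-1", [("id", "bigint")], "csv", "UTF-8",
               "excel", '"', ["gzip", "gzip"])
dst = SyncSide("redshift", "eu-west-1", [("id", "bigint")])
print(s3_to_redshift_blockers(src, dst))  # []
```

Returning a list of reasons, rather than a single boolean, makes it easier to see which input dataset setting to normalize, for example with an intermediate preparation step.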

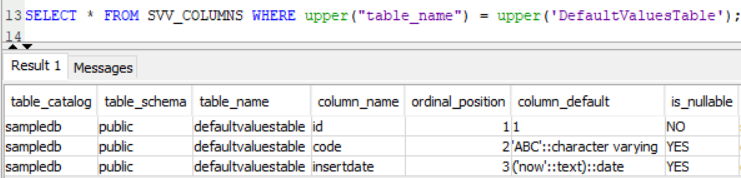

# Redshift database code

Loading data into Redshift using the regular SQL “INSERT” or “COPY” statements is extremely inefficient (a few dozen records per second) and should only be used for extremely small datasets. The recommended way to load data into Redshift is through a bulk COPY from files stored in Amazon S3.

DSS can automatically use this fast load method. This requires an S3 connection. Then, in the settings of the Redshift connection:

- In “Auto fast write connection”, enter the name of the S3 connection to use.
- In “Path in connection”, enter a path relative to the root of the S3 connection, such as “redshift-tmp”. This is a temporary path used to store temporary upload files. It should not be a path containing datasets.

DSS will now automatically use the optimal S3-to-Redshift copy mechanism when executing a recipe that needs to load data “from the outside” into Redshift, such as a code recipe.
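The bulk-load path described above amounts to staging the data as files under the temporary S3 path and then issuing a single Redshift `COPY` that reads them. The Python sketch below shows the two local halves of that idea with the standard library only: serializing rows to a GZip-compressed UTF-8 CSV payload and building a `COPY` statement for it. It is an assumption-laden illustration, not what DSS actually runs: the bucket name, object-key scheme, table name, and IAM role ARN are hypothetical, the upload to S3 (for example with an AWS SDK) is omitted, and inputs are assumed trusted because the SQL is built by string formatting.

```python
import csv
import gzip
import io
import uuid
from typing import Iterable, Sequence


def rows_to_gzip_csv(header: Sequence[str], rows: Iterable[Sequence[object]]) -> bytes:
    """Serialize rows as a GZip-compressed UTF-8 CSV file (minimal quoting)."""
    text = io.StringIO()
    writer = csv.writer(text, quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return gzip.compress(text.getvalue().encode("utf-8"))


def temp_key(path_in_connection: str) -> str:
    """Hypothetical unique object key under the temporary prefix, e.g. "redshift-tmp"."""
    return f"{path_in_connection.strip('/')}/{uuid.uuid4().hex}.csv.gz"


def build_copy_sql(table: str, bucket: str, key: str, iam_role_arn: str) -> str:
    """Redshift COPY loading one gzipped CSV object, skipping its header row.

    All arguments are assumed trusted; nothing here is quoted or escaped.
    """
    return (
        f"COPY {table} FROM 's3://{bucket}/{key}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS CSV GZIP IGNOREHEADER 1 ENCODING UTF8;"
    )


# Usage with hypothetical names: stage the payload, then load it in one COPY.
payload = rows_to_gzip_csv(["id", "name"], [(1, "a,b"), (2, "c")])
key = temp_key("redshift-tmp/")
sql = build_copy_sql("public.events", "my-bucket", key,
                     "arn:aws:iam::123456789012:role/RedshiftCopy")
print(sql)
```

A single `COPY` over staged files lets Redshift ingest the data in bulk instead of processing one statement per row, which is why the row-by-row approach is limited to very small datasets.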
